Deep Learning Lidar Odometry

2018

Traditional Lidar odometry is difficult because of motion distortion, occlusions, laser beam divergence, and similar effects. Instead of modelling each of these scenarios by hand, a data-driven method such as deep learning can learn to handle them from data.

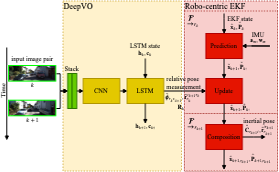

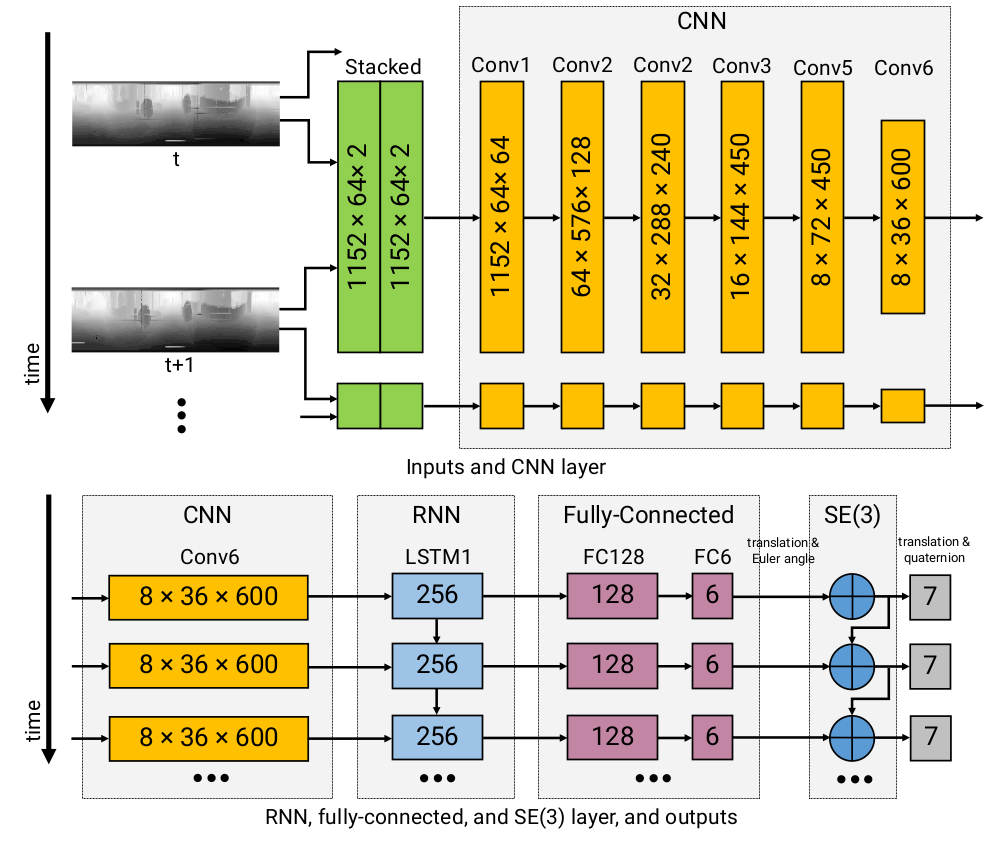

The idea came from a paper published in The International Journal of Robotics Research (IJRR) [1], in which the authors used a convolutional-recurrent neural network architecture to learn visual odometry. The convolutional layers extract features from each input, and the recurrent layers let the network learn system dynamics and propagate its state across multiple time steps. We modified this architecture for Lidar odometry.
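The sketch below shows the convolutional-recurrent idea in plain NumPy (1.20 or newer, for `sliding_window_view`), so it runs without a deep learning framework. Everything in it is a hypothetical stand-in and not the network we trained. The class name `ConvRecurrentOdometry`, the two small convolution layers, the single LSTM cell, the hidden size, and the 6-DoF output written as translation plus Euler angles are all assumptions, and the weights are random and untrained. It only illustrates the data flow: a convolutional encoder turns each frame into a feature vector, and the LSTM state carries information from earlier frames into later pose estimates.

```python
import numpy as np


def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))


def conv2d_relu(x, w, b, stride=2):
    """Valid 2-D convolution followed by ReLU. x: (C_in, H, W); w: (C_out, C_in, k, k)."""
    k = w.shape[-1]
    # All k x k patches: (C_in, H-k+1, W-k+1, k, k), then subsample by the stride
    win = np.lib.stride_tricks.sliding_window_view(x, (k, k), axis=(1, 2))
    win = win[:, ::stride, ::stride]
    out = np.einsum("chwij,ocij->ohw", win, w) + b[:, None, None]
    return np.maximum(out, 0.0)


class ConvRecurrentOdometry:
    """Hypothetical toy CNN-LSTM: per-frame conv encoder -> LSTM -> 6-DoF pose."""

    def __init__(self, in_ch=2, hidden=32, seed=0):
        rng = np.random.default_rng(seed)
        init = lambda *shape: rng.normal(0.0, 0.1, size=shape)  # random, untrained
        self.w1, self.b1 = init(8, in_ch, 3, 3), np.zeros(8)
        self.w2, self.b2 = init(16, 8, 3, 3), np.zeros(16)
        # LSTM weights for the gates stacked as [input, forget, output, candidate]
        self.W = init(4 * hidden, 16)
        self.U = init(4 * hidden, hidden)
        self.b = np.zeros(4 * hidden)
        self.W_out, self.b_out = init(6, hidden), np.zeros(6)
        self.hidden = hidden

    def encode(self, frame):
        f = conv2d_relu(frame, self.w1, self.b1)
        f = conv2d_relu(f, self.w2, self.b2)
        return f.mean(axis=(1, 2))  # global average pool -> (16,)

    def forward(self, frames):
        """frames: (T, C, H, W) -> (T, 6) poses [tx, ty, tz, roll, pitch, yaw]."""
        h = np.zeros(self.hidden)
        c = np.zeros(self.hidden)
        poses = []
        for frame in frames:
            z = self.W @ self.encode(frame) + self.U @ h + self.b
            i, f, o, g = np.split(z, 4)
            # The cell state c is carried from one time step to the next
            c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
            h = sigmoid(o) * np.tanh(c)
            poses.append(self.W_out @ h + self.b_out)
        return np.stack(poses)


# Usage: a sequence of 5 small two-channel frames (a real scan image is much wider)
frames = np.random.default_rng(1).normal(size=(5, 2, 16, 64))
poses = ConvRecurrentOdometry().forward(frames)
print(poses.shape)  # (5, 6)
```

Because `h` and `c` persist across the loop, changing the first frame also changes the poses predicted for later frames. That persistence is what lets this kind of network model motion over several time steps. A practical version would be written in a framework with automatic differentiation so it can be trained.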

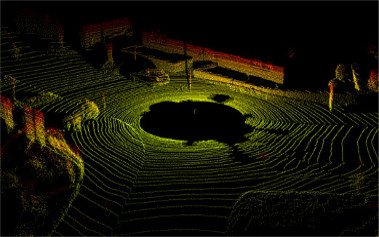

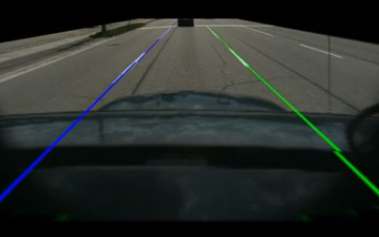

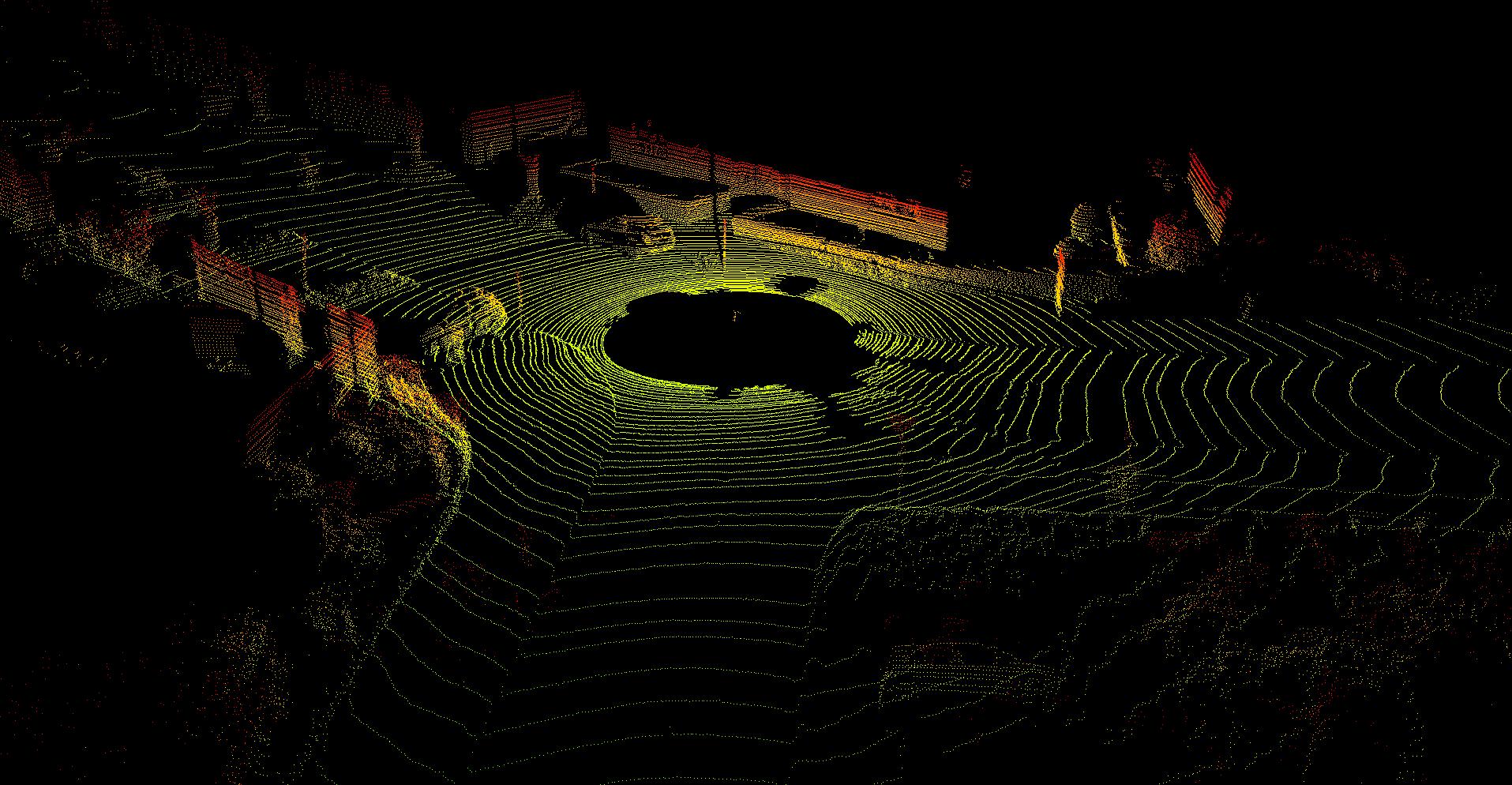

We used Lidar data from the KITTI dataset, which was recorded with a 64-beam Velodyne Lidar. Each scan is preprocessed into an image. Each row encodes a single laser beam and each column encodes a different azimuth angle, and the distance and intensity measurements are stacked as the image's two channels. The sequence of images is passed into the network, which outputs the Lidar poses. Further details are given in the project report.
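The projection step can be sketched as follows. KITTI stores each Velodyne scan as a binary file of float32 values, four per point (x, y, z, reflectance), which loads with `np.fromfile(path, dtype=np.float32).reshape(-1, 4)`. The raw files do not record which laser produced each point. This sketch therefore assumes the row can be approximated from the elevation angle over the HDL-64E's nominal vertical field of view (+2° to -24.8°). The function name `lidar_to_image` and the 1800-column width are hypothetical choices, not the settings used in the project. When several points fall into the same pixel, the nearest one is kept.

```python
import numpy as np


def lidar_to_image(points, n_rows=64, n_cols=1800,
                   fov_up_deg=2.0, fov_down_deg=-24.8):
    """Project an (N, 4) array of [x, y, z, intensity] to a (2, n_rows, n_cols) image.

    Channel 0 holds distance and channel 1 holds intensity. Rows come from the
    elevation angle (assumed to approximate the beam index) and columns from
    the azimuth angle. Pixels with no point stay 0.
    """
    x, y, z, intensity = points.T
    dist = np.sqrt(x**2 + y**2 + z**2)
    keep = dist > 0  # drop invalid zero-range points before dividing
    x, y, z, intensity, dist = x[keep], y[keep], z[keep], intensity[keep], dist[keep]

    azimuth = np.arctan2(y, x)                          # in [-pi, pi]
    elevation = np.arcsin(np.clip(z / dist, -1.0, 1.0))
    fov_up, fov_down = np.radians(fov_up_deg), np.radians(fov_down_deg)

    # Row 0 is the highest beam; column 0 is azimuth -pi
    row = (fov_up - elevation) / (fov_up - fov_down) * n_rows
    col = (azimuth + np.pi) / (2.0 * np.pi) * n_cols
    row = np.clip(np.floor(row), 0, n_rows - 1).astype(int)
    col = np.clip(np.floor(col), 0, n_cols - 1).astype(int)

    # Keep only the nearest point per pixel: sort by distance, take first hit
    pix = row * n_cols + col
    order = np.argsort(dist, kind="stable")
    _, first = np.unique(pix[order], return_index=True)
    sel = order[first]

    image = np.zeros((2, n_rows, n_cols), dtype=np.float32)
    image[0, row[sel], col[sel]] = dist[sel]
    image[1, row[sel], col[sel]] = intensity[sel]
    return image
```

Applying the function to consecutive scans and stacking the results with `np.stack` produces an image sequence of shape (T, 2, 64, 1800).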

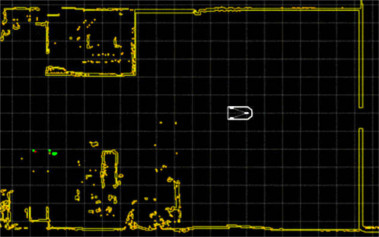

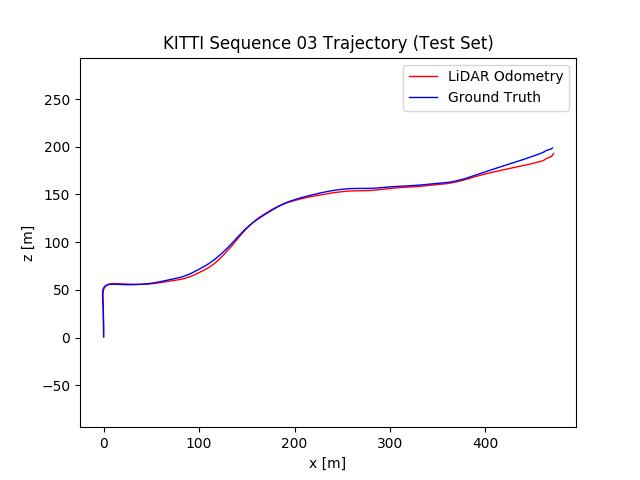

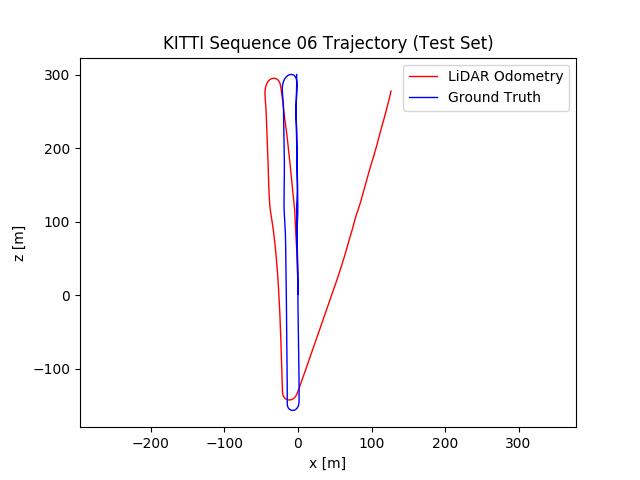

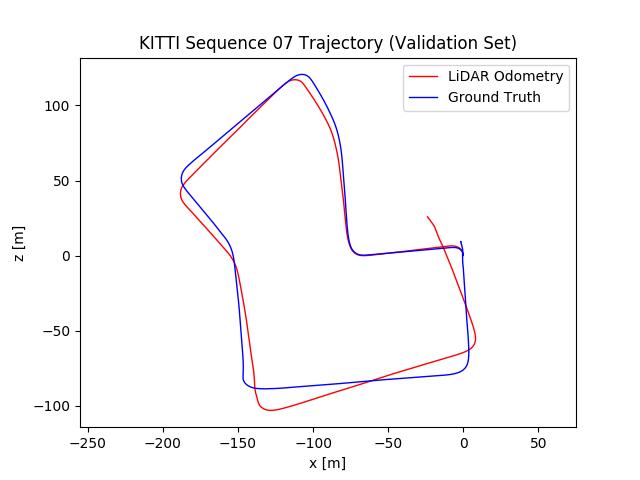

The network is trained with a frame-to-frame loss as well as a loss over sub-sequences. The network converges, and some of the odometry results are shown in the figures. The results are not as good as those of classical methods such as LOAM [2]. They do show that a neural network can estimate odometry from Lidar data, and we are excited to keep improving them.
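The sketch below shows one way to combine a frame-to-frame loss with a sub-sequence loss. It is an assumption about the formulation, not the exact loss from the report. Relative poses are 6-vectors [tx, ty, tz, roll, pitch, yaw], converted to 4x4 transforms with a Z-Y-X Euler convention. The per-pose error is the squared translation error plus `kappa` times the squared rotation angle. The sub-sequence term composes the relative poses over every window of `window` consecutive steps. The names `odometry_loss`, `kappa`, `window`, and `weight`, and their default values, are hypothetical. Because it is plain NumPy, it evaluates the loss but does not compute gradients.

```python
import numpy as np


def euler_to_matrix(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll) (assumed convention)."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rx = np.array([[1, 0, 0], [0, cr, -sr], [0, sr, cr]])
    ry = np.array([[cp, 0, sp], [0, 1, 0], [-sp, 0, cp]])
    rz = np.array([[cy, -sy, 0], [sy, cy, 0], [0, 0, 1]])
    return rz @ ry @ rx


def to_transform(pose):
    """6-vector [tx, ty, tz, roll, pitch, yaw] -> 4x4 homogeneous transform."""
    T = np.eye(4)
    T[:3, :3] = euler_to_matrix(*pose[3:])
    T[:3, 3] = pose[:3]
    return T


def pose_error(T_pred, T_gt, kappa):
    """Squared translation + kappa * squared rotation angle of inv(T_gt) @ T_pred."""
    E = np.linalg.inv(T_gt) @ T_pred
    cos_angle = np.clip((np.trace(E[:3, :3]) - 1.0) / 2.0, -1.0, 1.0)
    return float(E[:3, 3] @ E[:3, 3] + kappa * np.arccos(cos_angle) ** 2)


def odometry_loss(pred, gt, window=4, kappa=100.0, weight=1.0):
    """pred, gt: (T, 6) relative poses between consecutive frames."""
    Tp = [to_transform(p) for p in pred]
    Tg = [to_transform(g) for g in gt]
    # Frame-to-frame term: compare each relative pose directly
    frame = np.mean([pose_error(a, b, kappa) for a, b in zip(Tp, Tg)])

    # Sub-sequence term: compose relative poses over each window, then compare
    sub = []
    for s in range(len(Tp) - window + 1):
        acc_p, acc_g = np.eye(4), np.eye(4)
        for k in range(s, s + window):
            acc_p, acc_g = acc_p @ Tp[k], acc_g @ Tg[k]
        sub.append(pose_error(acc_p, acc_g, kappa))
    return float(frame + weight * (np.mean(sub) if sub else 0.0))
```

The sub-sequence term penalizes drift. A small constant bias in every relative pose gives only a small frame-to-frame error, but after several biased steps are composed, the error over the window is typically larger. This pushes the network toward estimates that stay consistent over several frames.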

[1] S. Wang, R. Clark, H. Wen, and N. Trigoni, “End-to-end, sequence-to-sequence probabilistic visual odometry through deep neural networks,” The International Journal of Robotics Research, vol. 0, no. 0, p. 0278364917734298, 0.

[2] J. Zhang and S. Singh, “LOAM: Lidar odometry and mapping in real-time,” in Robotics: Science and Systems Conference, July 2014.